How we use audio ray tracing and cloud computing in Absylon 7

Hi all, and welcome back from the TinyPlay team. We are currently taking part in the IndieCup S’21 contest, where we reached the finals in the Best Audio category. In this article I would like to share how we work with audio, and I hope you find it useful.

Audio architecture

The game's audio consists of three components: sounds (footsteps, atmosphere, enemies, weapons, and more), music (atmospheric, action music, extra accompaniment), and voice acting (characters, audio diaries, thunderclaps). The audio architecture is divided as follows:

Sounds:

- The environment and everything associated with it (leaves, wind, creaks of trees, gates, etc.);

- Sounds of enemies (attacking, taking damage, detecting, dying);

- Sounds of footsteps for each of the surfaces (over 200 sounds in total for different physical materials);

- Horror sounds (fired by trigger zones);

- Weapons (firing, reloading, etc.);

- Collision sounds for physical objects;

- Sounds of effects (explosions, hits, sparks, etc.);

- Other sounds;

Music:

- Atmospheric background music;

- Action music for certain scenes;

- Tense music;

- Menu music;

Voiceovers:

- Voice-overs for characters in different languages;

- Audio diary voiceovers;

- Switch voiceovers and other voiceovers;

Compression and loading settings differ between music and sounds: music is streamed, large sounds are loaded and decompressed before the scene loads, and small sounds are decompressed on the fly.
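The loading rule above can be sketched as a small decision function. This is only an illustration: the size threshold and the strategy labels are assumptions made for the example (the article gives neither), although the labels loosely mirror Unity's audio clip load types.

```python
from dataclasses import dataclass

# Hypothetical threshold separating "big" from "small" sounds.
# The article does not state the value actually used.
LARGE_CLIP_BYTES = 200 * 1024


@dataclass
class Clip:
    name: str
    kind: str        # "music" or "sound"
    size_bytes: int


def load_strategy(clip: Clip) -> str:
    """Pick a loading strategy following the rule described in the text."""
    if clip.kind == "music":
        return "streaming"            # read from disk while playing
    if clip.size_bytes >= LARGE_CLIP_BYTES:
        return "decompress_on_load"   # unpacked before the scene starts
    return "decode_on_the_fly"        # kept compressed, decoded when played


if __name__ == "__main__":
    for clip in (
        Clip("menu_theme", "music", 6_000_000),
        Clip("explosion_long", "sound", 900_000),
        Clip("footstep_gravel_01", "sound", 40_000),
    ):
        print(clip.name, "->", load_strategy(clip))
```

Keeping the rule in one place like this makes it easy to audit which clips end up in memory before a scene starts.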

Working with music and sounds. Mixing

To get smooth transitions, distinct sound areas, and compression, we use the standard Unity mixer. The transitions themselves are animated with DOTween.

Mixers are also switched through special Volume-zones that change the active mixer or its settings to suit the environment (for example, to have an echo effect in the basement).
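To illustrate what a smooth transition between two mixer states involves, here is a minimal sketch of a volume fade. It is not the DOTween or Unity API: the function names, the -80 dB floor and the linear easing are assumptions chosen for the example. The only fixed fact is the standard conversion from linear amplitude to decibels, 20·log10(volume).

```python
import math


def linear_to_db(volume: float, floor_db: float = -80.0) -> float:
    """Convert a linear volume (0..1) to decibels, clamped to a floor."""
    if volume <= 0.0:
        return floor_db
    return max(floor_db, 20.0 * math.log10(volume))


def fade_steps(start: float, end: float, duration: float, dt: float) -> list:
    """Decibel values for a linear fade from start to end volume.

    duration and dt are in seconds; one value is produced per tick,
    including both endpoints.
    """
    steps = max(1, round(duration / dt))
    return [
        linear_to_db(start + (end - start) * i / steps)
        for i in range(steps + 1)
    ]


if __name__ == "__main__":
    # Fade a zone's mixer group in over one second at four ticks per second.
    for db in fade_steps(0.0, 1.0, duration=1.0, dt=0.25):
        print(f"{db:.2f} dB")
```

Interpolating the linear volume and converting to decibels at each tick avoids the abrupt jump you get when interpolating the decibel value directly from the floor.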

Sounds are played by a player component driven by animation events.

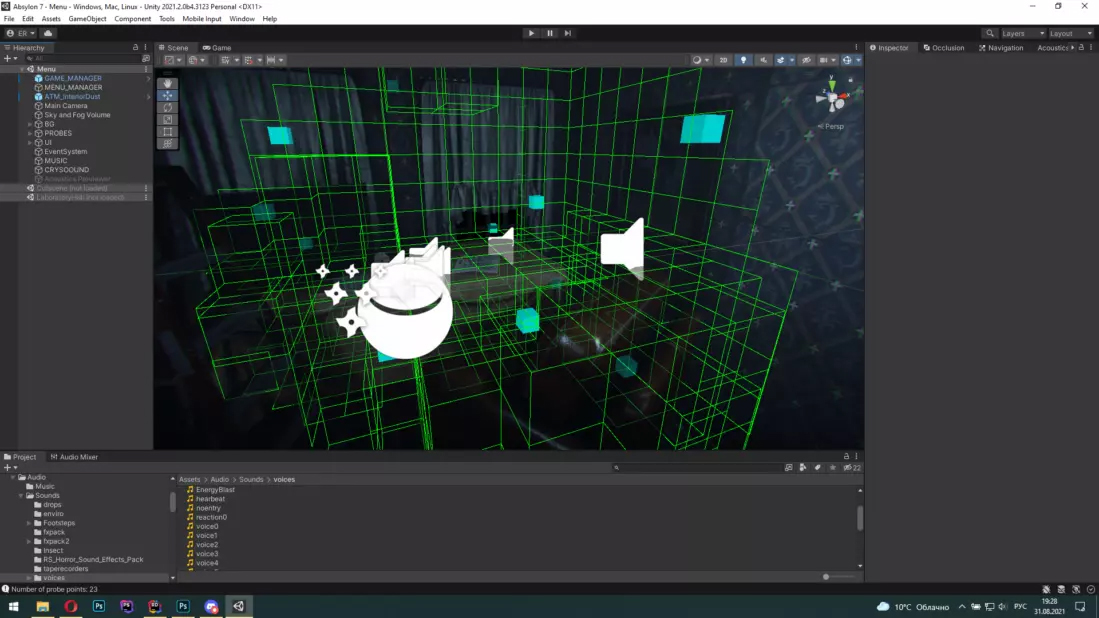

Volumetric sound. Ray tracing and geometry detection

We went through several options for working with surround and, most importantly, physically-based sound. We tried Steam Audio, FMOD, Microsoft Acoustics, etc.

In the end we chose FMOD + Dolby Atmos + Microsoft Acoustics. Why not Steam Audio? We were getting unexpected crashes in Windows builds from its library, and we couldn't debug them.

What do we use it all for?

1) For automatic calculation of sound propagation in the environment, taking into account its geometry, materials and reflectivity (each object in the game is configured separately);

2) For spatial processing of audio using HRTF. In conjunction with Dolby Atmos, the sound is as natural as possible.

How does it work?

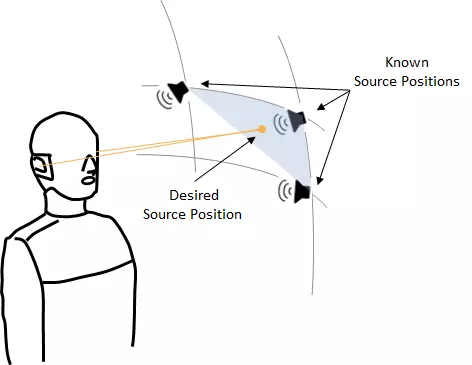

The system takes into account the position of the sound source, the position of the listener, the angle between them, and dozens of other parameters, so that the sound is perceived correctly both on headphones and on 5.1 systems.

In addition, the system uses real-time ray tracing to find the collisions and reflections that apply to sound waves. To reduce the load, these calculations run on the GPU and part of the sound is baked (similar to Light Probes, but for sound).
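The geometric idea behind tracing sound can be shown with a single bounce. The sketch below is an illustration only, not the method used inside any of the libraries named above: it mirrors a ray about a surface normal and estimates how much later a floor reflection arrives than the direct sound. The function names, the flat floor at a fixed height and the speed of sound constant are assumptions for the example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly dry air at 20 degrees Celsius


def reflect(direction, normal):
    """Mirror a direction vector about a unit normal: r = d - 2(d.n)n."""
    dot = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * dot * n for d, n in zip(direction, normal))


def echo_delay(source, listener, floor_y=0.0):
    """Seconds by which one floor bounce lags the direct path.

    Uses the mirror-image trick: reflecting the source through the floor
    plane gives a point whose straight-line distance to the listener
    equals the length of the bounced path.
    """
    direct = math.dist(source, listener)
    mirrored = (source[0], 2.0 * floor_y - source[1], source[2])
    bounced = math.dist(mirrored, listener)
    return (bounced - direct) / SPEED_OF_SOUND


if __name__ == "__main__":
    print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))
    delay = echo_delay((0.0, 1.5, 0.0), (4.0, 1.5, 0.0))
    print(f"floor echo arrives {delay * 1000:.2f} ms after the direct sound")
```

A real system repeats this for many rays and surfaces and weights each path by the material's reflectivity, which is why baking and GPU acceleration matter.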

Cloud computing for baked sound

Baking sound into a scene with thousands of 3D models and millions of polygons takes a long time, so we use Azure cloud computing. Fortunately, Microsoft has a great tool built on virtual machines for exactly this: Azure Batch.

With a cloud computing service, the sound bake time can be cut from 2 hours to 10 minutes, which saves a lot of time at a fairly low price (about $1.50 per hour).
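The figures above work out as follows. The sketch assumes that the quoted hourly price covers the whole cloud pool and that billing is proportional to the time used; the article states neither, so treat the cost per bake as a rough estimate.

```python
LOCAL_BAKE_MINUTES = 120     # 2 hours, from the article
CLOUD_BAKE_MINUTES = 10      # from the article
PRICE_PER_HOUR_USD = 1.5     # from the article; assumed to cover the whole pool


def bake_summary(local_min: float, cloud_min: float, price_per_hour: float) -> dict:
    """Speedup, time saved and estimated cost for one cloud bake."""
    return {
        "speedup": local_min / cloud_min,
        "minutes_saved": local_min - cloud_min,
        # Assumes per-minute proportional billing (not stated in the article).
        "cost_usd": price_per_hour * cloud_min / 60.0,
    }


if __name__ == "__main__":
    summary = bake_summary(LOCAL_BAKE_MINUTES, CLOUD_BAKE_MINUTES, PRICE_PER_HOUR_USD)
    print(summary)
```

Under these assumptions a bake is 12 times faster, saves 110 minutes, and costs about $0.25.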

Summary

The combination of these technologies lets us achieve reasonably realistic sound, which in turn can greatly affect the atmosphere of the game.

You can see an example of how everything works together here (ignore the main character's voiceover: we have already replaced it, but haven't published the new one yet):

